Deep dive into Layla Agents

- Layla

- Oct 1

- 5 min read

If you haven't already, please read the brief overview of how Agents work in Layla here: https://www.layla-network.ai/post/how-to-enable-agents-functions-and-tool-calling-in-layla

This article will dive deeper into Layla's Agentic Features.

Agent Internals

Agents are self-contained workflows that execute when needed while you chat with the LLM. Each Agent has a trigger, which fires on specific configurable conditions, and a list of tools which are executed consecutively.

The Agent result is injected into the conversation as context, and the LLM will pick up on that and give you a contextualised response!

Triggers

There are many types of triggers in Layla. The following image shows a few:

Intent - Layla runs intent classification on your input and triggers this agent depending on which intent is detected. There are numerous intents built into the classifier, e.g. "search news", "query weather", "set alarm", "set calendar", etc. The full list can be viewed by selecting the dropdown after an Intent Trigger is added.

Regex - The agent is triggered when your input regex is matched within the chat message. The matched string is the input of the first tool.

Phrase - The agent is triggered when the entered phrase is detected in the message (case-insensitive). The matched phrase is the input of the first tool.

Date/Time Detected - The agent is triggered if a date or time is detected within the message. The detected date/time is the input of the first tool.

MCP/Layla Tool Trigger - this is a more advanced feature which we will cover in our next article! (Link)

Is Voice Mode - A simple trigger that fires when Voice Mode is turned on.

These triggers are run on each input and output message in your chats. When a trigger is activated, the agent starts, and the value the trigger matched (for example, the matched string or the detected date/time) is used as the input to the first tool.
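As a rough illustration of the Regex and Phrase triggers described above, the Python sketch below shows how a trigger could return the matched text that then becomes the first tool's input. The function names `regex_trigger` and `phrase_trigger` are hypothetical; this is not Layla's actual implementation, only a sketch built on standard regular-expression search.

```python
import re
from typing import Optional


def regex_trigger(pattern: str, message: str) -> Optional[str]:
    """Return the text matched by `pattern` inside `message`, or None.

    Hypothetical helper: models a Regex trigger whose matched string
    is handed to the first tool.
    """
    match = re.search(pattern, message)
    return match.group(0) if match else None


def phrase_trigger(phrase: str, message: str) -> Optional[str]:
    """Case-insensitive phrase detection.

    Returns the phrase exactly as it appears in the message, or None
    if the phrase is not present.
    """
    # re.escape makes sure the phrase is treated as literal text.
    match = re.search(re.escape(phrase), message, re.IGNORECASE)
    return match.group(0) if match else None


if __name__ == "__main__":
    print(regex_trigger(r"\d+\.\d+\.\d+\.\d+", "ping 10.0.0.1 now"))
    print(phrase_trigger("what's my ip", "Hey, What's My IP?"))
```

A trigger that returns `None` simply means the agent does not start for that message.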

Tools

The heart of Agents in Layla is Tools.

Tools perform functions such as calling external services, operating your phone, and much more. Layla comes with numerous built-in tools, and more are being added constantly!

There are too many tools to go over in this article, but we will highlight some tools that are commonly used.

In the Agents mini-app, scroll down to see a list of available tools Layla can use. Tapping on one will bring up a popup with more details. Let's take the HTTP Request tool as an example:

The HTTP Request tool has several parameters which you can configure. These can be hard-coded (for example, specifying a particular URL for this Agent to call), or they can be generated by the LLM (more details below).

After adding a tool, you will be able to configure its parameters in the Agent editing popup. As shown in the previous article, you can specify the URL by typing it into the input field.

The output of each tool is used as the input for the next. This way, you can chain multiple tools together in one Agent. In the example above, the output of the HTTP Request tool is the raw string returned after calling the URL with the configured parameters.
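The chaining behaviour described above can be sketched in a few lines of Python. This is a minimal model, assuming each tool is a function from string to string; `run_agent`, `fake_http_request`, and `strip_whitespace` are hypothetical names, and the stand-in HTTP tool returns a fixed documentation-range IP instead of calling a real URL.

```python
from typing import Callable, List

# Assumption: a tool takes the previous output as text and returns text.
Tool = Callable[[str], str]


def run_agent(trigger_input: str, tools: List[Tool]) -> str:
    """Run tools in order, feeding each output into the next tool."""
    output = trigger_input
    for tool in tools:
        output = tool(output)
    return output


def fake_http_request(_tool_input: str) -> str:
    """Stand-in for an HTTP Request tool with a hard-coded URL.

    It ignores its input and returns a canned raw response body.
    """
    return "203.0.113.7\n"


def strip_whitespace(tool_input: str) -> str:
    """Stand-in for a second tool that cleans up the raw response."""
    return tool_input.strip()


if __name__ == "__main__":
    result = run_agent("what's my ip", [fake_http_request, strip_whitespace])
    print(result)
```

Modelling tools as plain functions keeps the chain easy to reason about: the agent's final result is simply whatever the last tool returns.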

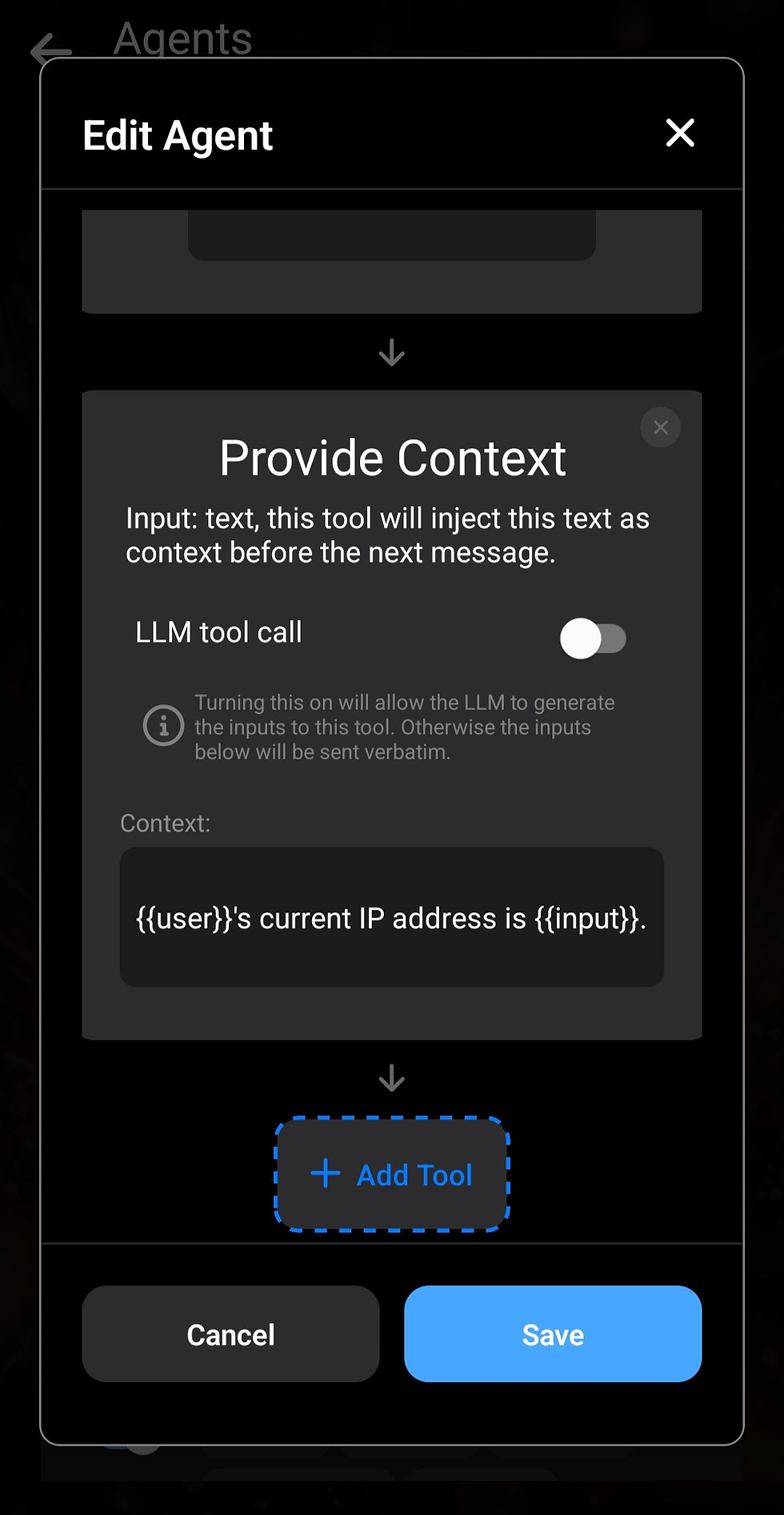

As introduced in the previous article, the Provide Context tool is a critical tool which puts the final output of the Agent into the LLM's context. This is the tool which will give your LLM grounded results after agent execution.

Testing Agents

After you've created your Agent, you may want to test it. It's easy to do so in Layla by using the Test Agent button in the list of Agents. Testing the Agent also gives you visibility into the inputs and outputs of each step, so you can better understand how Agents work.

Let's use the "What's My IP?" Agent as an example:

You can see the Agent first makes an HTTP request to https://api.ipify.org.

The output of the HTTP Request is shown (which is your IP in plain text).

The output is then passed to the Provide Context tool, which formats it as a contextual message for the LLM.

The contextual message can be configured in the tool itself. In this example:

Note the usage of templates here (the double curly brackets such as {{input}}). The {{input}} template is a special placeholder that is replaced with the input to this tool.

In the above example, the output of the HTTP request is the input of the Provide Context tool, so after replacing, the output will be: {{user}}'s current IP address is xx.xx.xx.xx

When injected into the conversation, the {{user}} template is further replaced by your selected persona (this operates the same as custom prompts).
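The two replacement stages described above can be sketched as plain string substitution. This is only an illustration: the function names are hypothetical, the template text is inferred from the output shown above, and the persona name "Alex" and the IP address are made-up example values.

```python
def render_tool_template(template: str, tool_input: str) -> str:
    """Stage 1: replace {{input}} with the input passed to the tool."""
    return template.replace("{{input}}", tool_input)


def render_persona(text: str, persona_name: str) -> str:
    """Stage 2: replace {{user}} when the text is injected into the chat."""
    return text.replace("{{user}}", persona_name)


if __name__ == "__main__":
    # Assumed template, reconstructed from the example output in the article.
    template = "{{user}}'s current IP address is {{input}}"

    # Output of the Provide Context tool: {{user}} is still a placeholder.
    tool_output = render_tool_template(template, "203.0.113.7")
    print(tool_output)

    # Text as it would read once the persona name is filled in.
    print(render_persona(tool_output, "Alex"))
```

Keeping the two stages separate mirrors the article: {{input}} is resolved inside the tool, while {{user}} is only resolved when the message enters the conversation.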

LLM Generated Parameters

The more eagle-eyed of you may have noticed that up until now, we have always hard-coded our parameters for each tool. The most we have done is use our matched inputs as parameters.

The true power of LLM integrated Agents comes from the fact that we can ask the LLM to generate inputs of our tools. This gives us flexibility whilst taking advantage of the natural language capabilities of the LLM.

A common scenario is asking our LLM to "search the web" for something. We don't want to input all of our message as the search keywords. The LLM should be able to take your message and the contextual clues in your conversation to search the web with intelligent keywords.

Another scenario is asking the LLM to draft an email for you. You would say something like "draft an email to my co-worker reminding him of our meeting". You would want the LLM to generate the email body, and open your email app with the body pre-filled.

We can do this with Layla:

We take the "Send Email" Agent as an example. The "Send Email" tool has two parameters: "Subject" and "Message". You will notice we turned the LLM tool call option ON.

This will tell the LLM to generate the contents of these parameters. So when this agent is triggered, the LLM will generate the parameters for the email subject and body, then execute the tool, which will open your email client with the necessary information!

With the LLM tool call option ON, you can input natural language instructions in the parameter fields. This tells the LLM what each field is for in natural language. The LLM will understand your instruction and generate the appropriate inputs based on your conversation context for each of the fields.
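To make the idea concrete, here is one way natural language parameter instructions could be turned into an LLM request and the reply turned back into tool parameters. This is a sketch under stated assumptions, not Layla's internal format: the prompt wording, the JSON reply convention, and the names `build_parameter_prompt` and `parse_parameters` are all hypothetical, and the LLM reply is a canned string rather than a real model call.

```python
import json
from typing import Dict


def build_parameter_prompt(
    tool_name: str, instructions: Dict[str, str], conversation: str
) -> str:
    """Build a prompt asking the LLM to fill in each tool parameter.

    `instructions` maps a parameter name to the natural language
    instruction typed into that parameter's field.
    """
    lines = [
        f"Fill in the parameters for the tool '{tool_name}'.",
        "Reply with a single JSON object using exactly these keys:",
    ]
    for name, instruction in instructions.items():
        lines.append(f"- {name}: {instruction}")
    lines.append("Conversation so far:")
    lines.append(conversation)
    return "\n".join(lines)


def parse_parameters(reply: str, instructions: Dict[str, str]) -> Dict[str, str]:
    """Parse the LLM's JSON reply and check every parameter is present."""
    data = json.loads(reply)
    missing = [name for name in instructions if name not in data]
    if missing:
        raise ValueError(f"LLM reply is missing parameters: {missing}")
    return {name: str(data[name]) for name in instructions}


if __name__ == "__main__":
    instructions = {
        "Subject": "A short subject line for the email",
        "Message": "The full body of the email",
    }
    prompt = build_parameter_prompt(
        "Send Email",
        instructions,
        "User: draft an email to my co-worker reminding him of our meeting",
    )
    print(prompt)

    # Canned reply standing in for a real LLM response.
    reply = '{"Subject": "Meeting reminder", "Message": "Hi, see you at the meeting."}'
    print(parse_parameters(reply, instructions))
```

Validating the reply before running the tool means a missing field surfaces as a clear error instead of an email opened with empty content.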

A more complicated example can be seen in the Schedule Event Agent: it has many parameters, and each parameter is explained in detail to the LLM.
